I’m a researcher and second-year master’s student at Stanford’s CCRMA, working at the intersection of human–computer interaction, auditory neuroscience, and artificial intelligence. I’m currently at the Shape Lab and affiliated with the Neuromusic Lab, where I explore how generative systems, domain-specific programming languages, and brain-computer interfaces can enable new forms of creativity, well-being, and learning. My projects range from developing human–AI collaboration systems for spatial audio to creating AI thinking-partners for creative ideation.

I hold a bachelor’s degree in Computer Music and Psychoacoustics from Berklee College of Music. Before Stanford, I worked as a teaching assistant at MIT and as a Virtual Reality Developer at Prisms, a San Francisco–based EdTech startup focused on immersive STEM learning.

I share my thoughts and reflections here.

research themes

Generative Agents:

Simulation, thinking-partner, and co-pilot systems for self-discovery and creativity

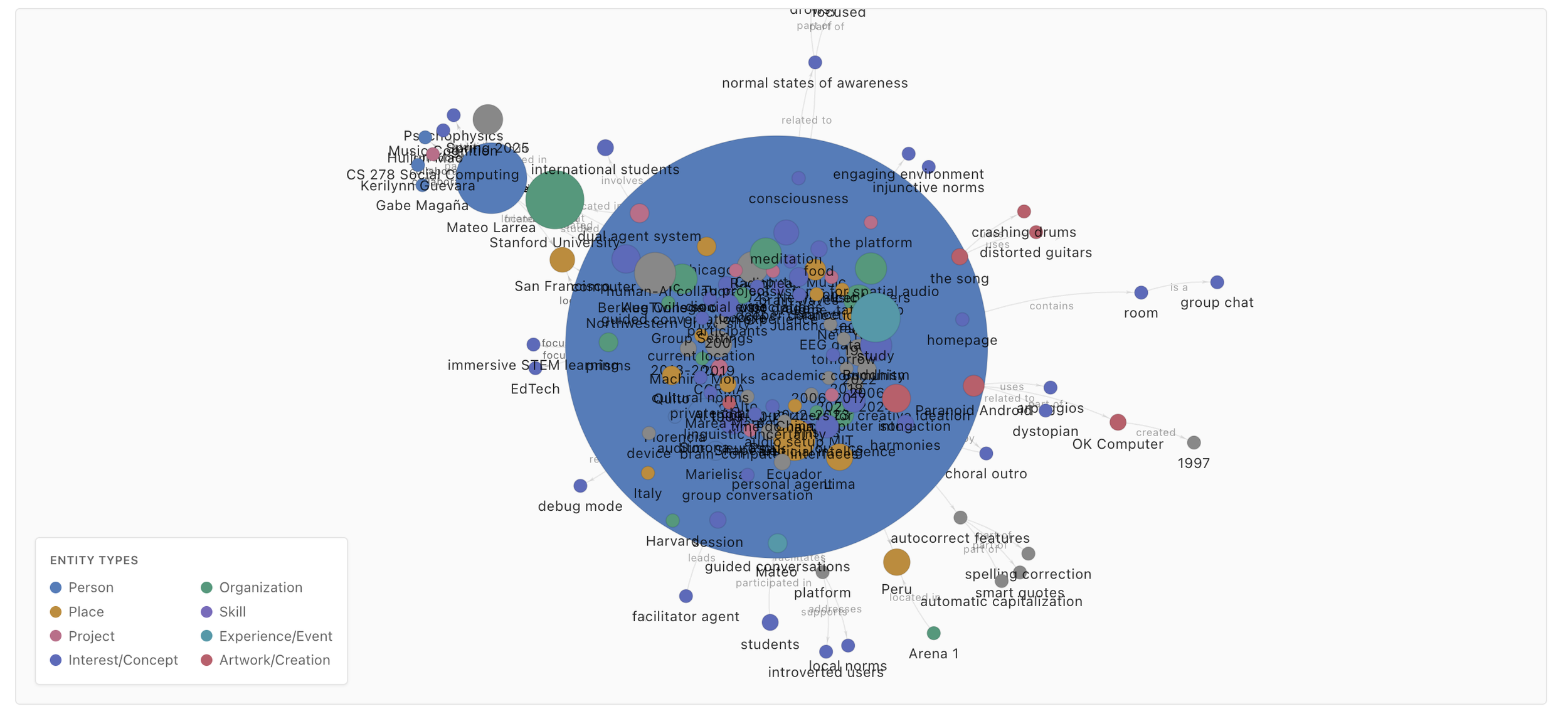

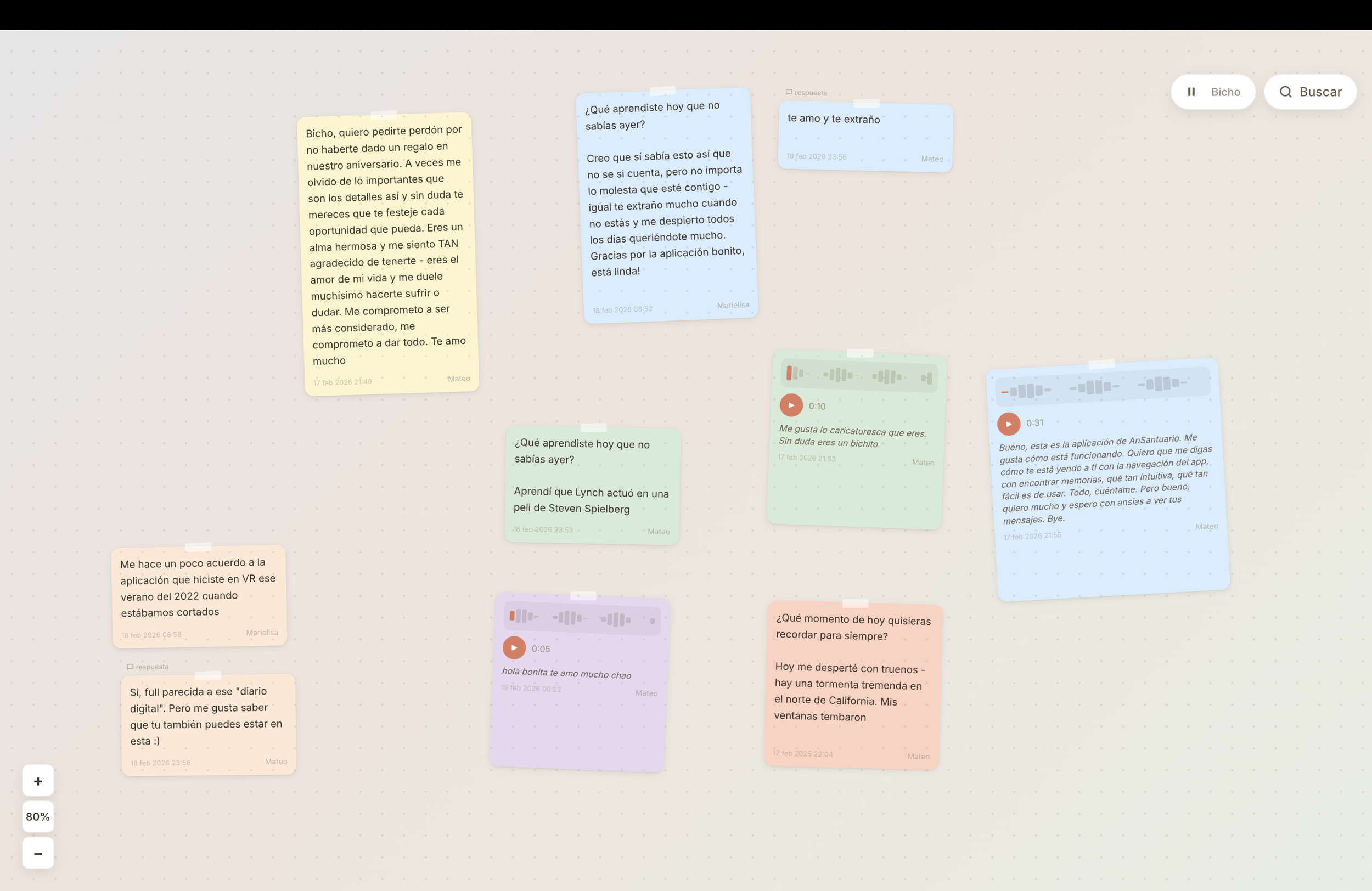

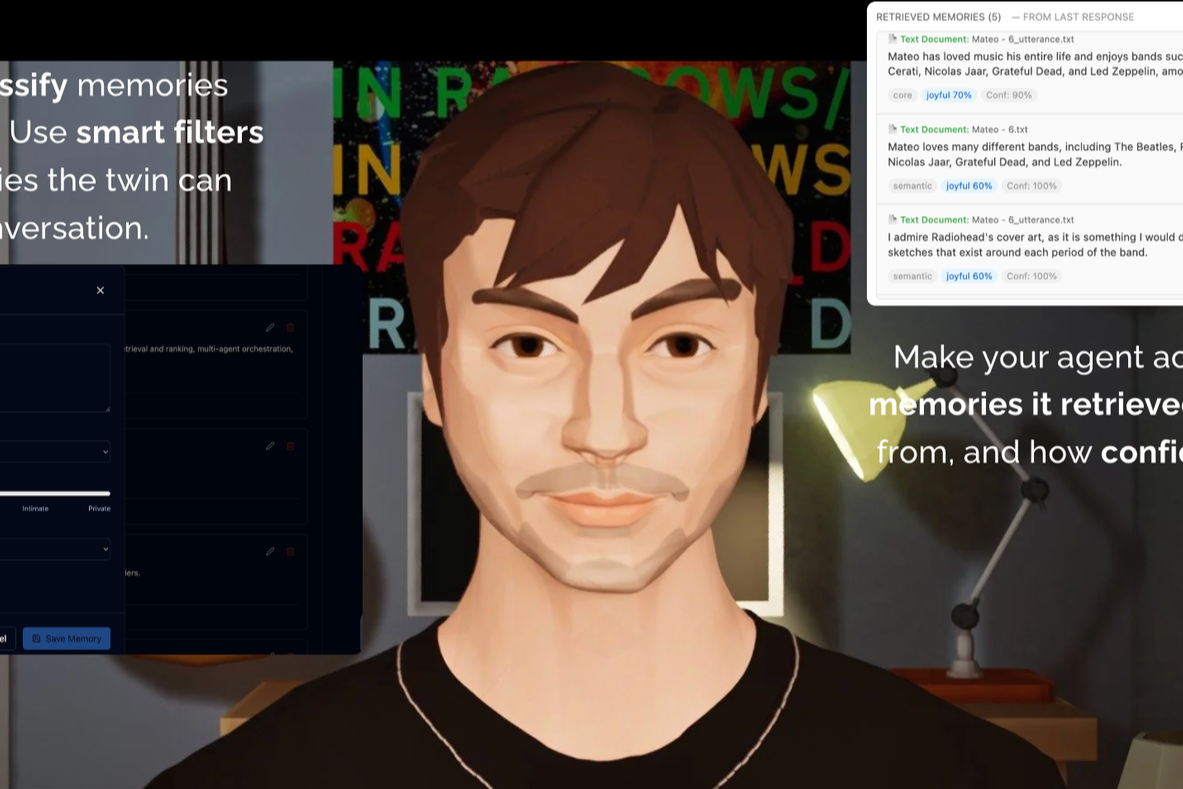

I design generative agents that act as reflective partners rather than automated personas. My work explores how memory, conversational style, and relational history can give agents continuity and coherence, allowing them to support self-understanding, creativity, and meaningful social interaction. These systems are built to preserve human intention and agency while offering new ways to think, experiment, and connect.

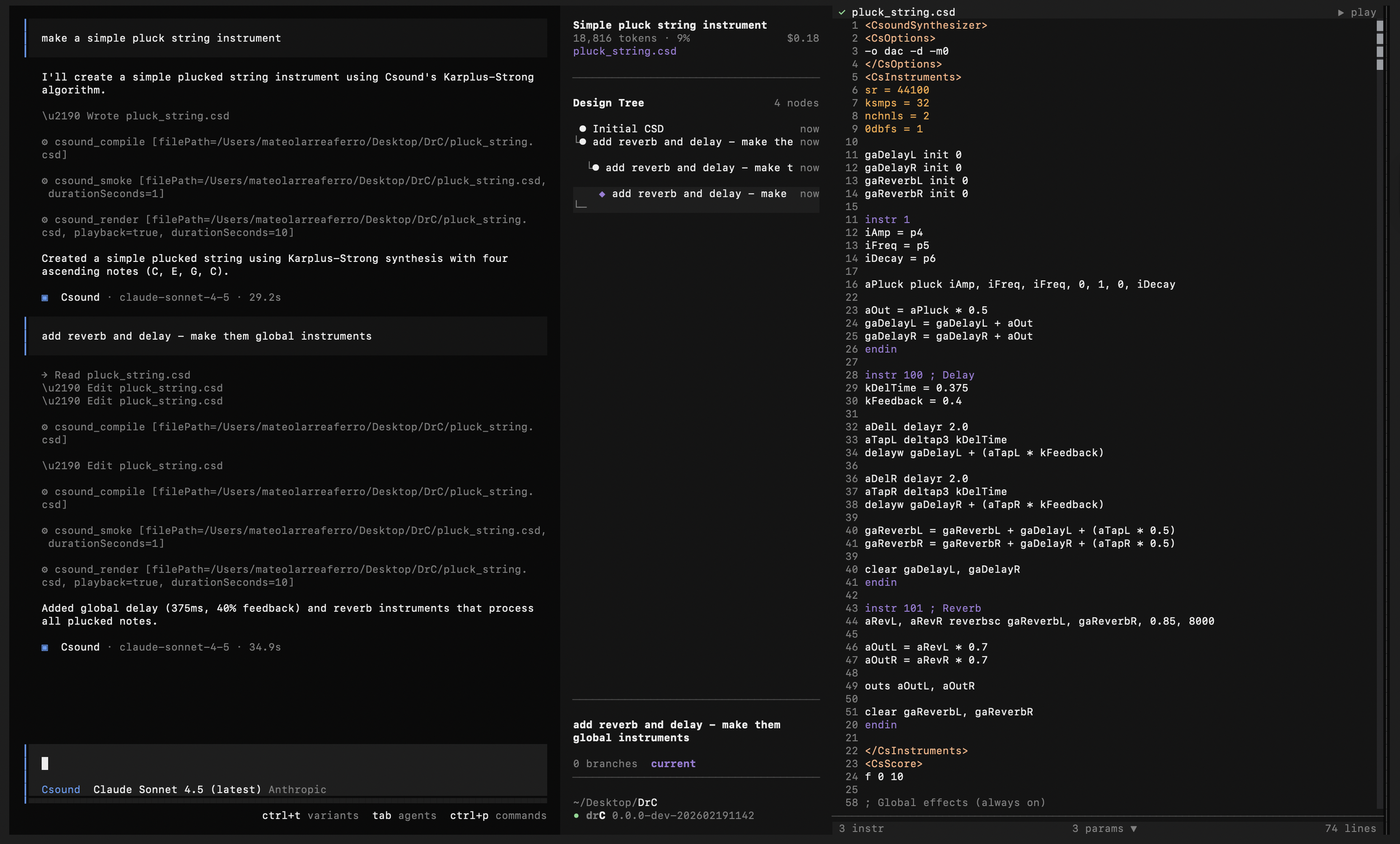

Human-in-the-Loop Generative Tools:

Generative interfaces that reduce technical burden while preserving creative agency

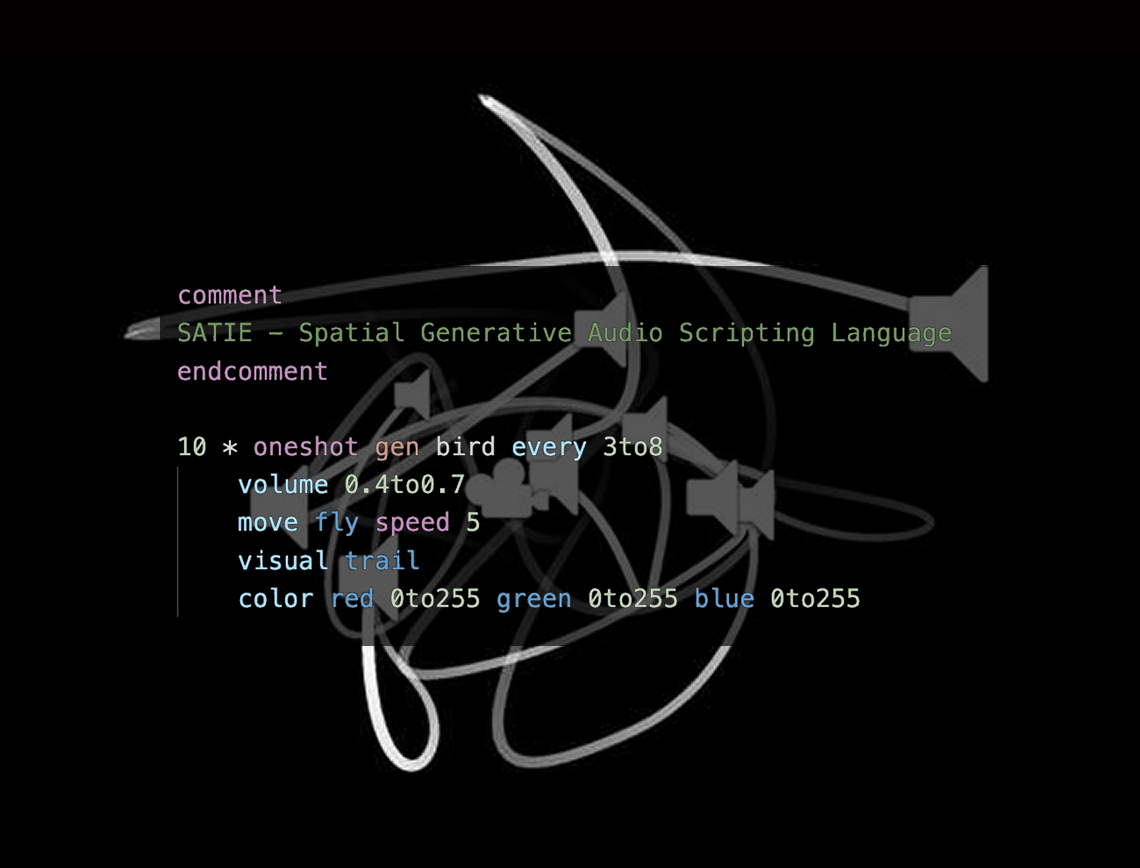

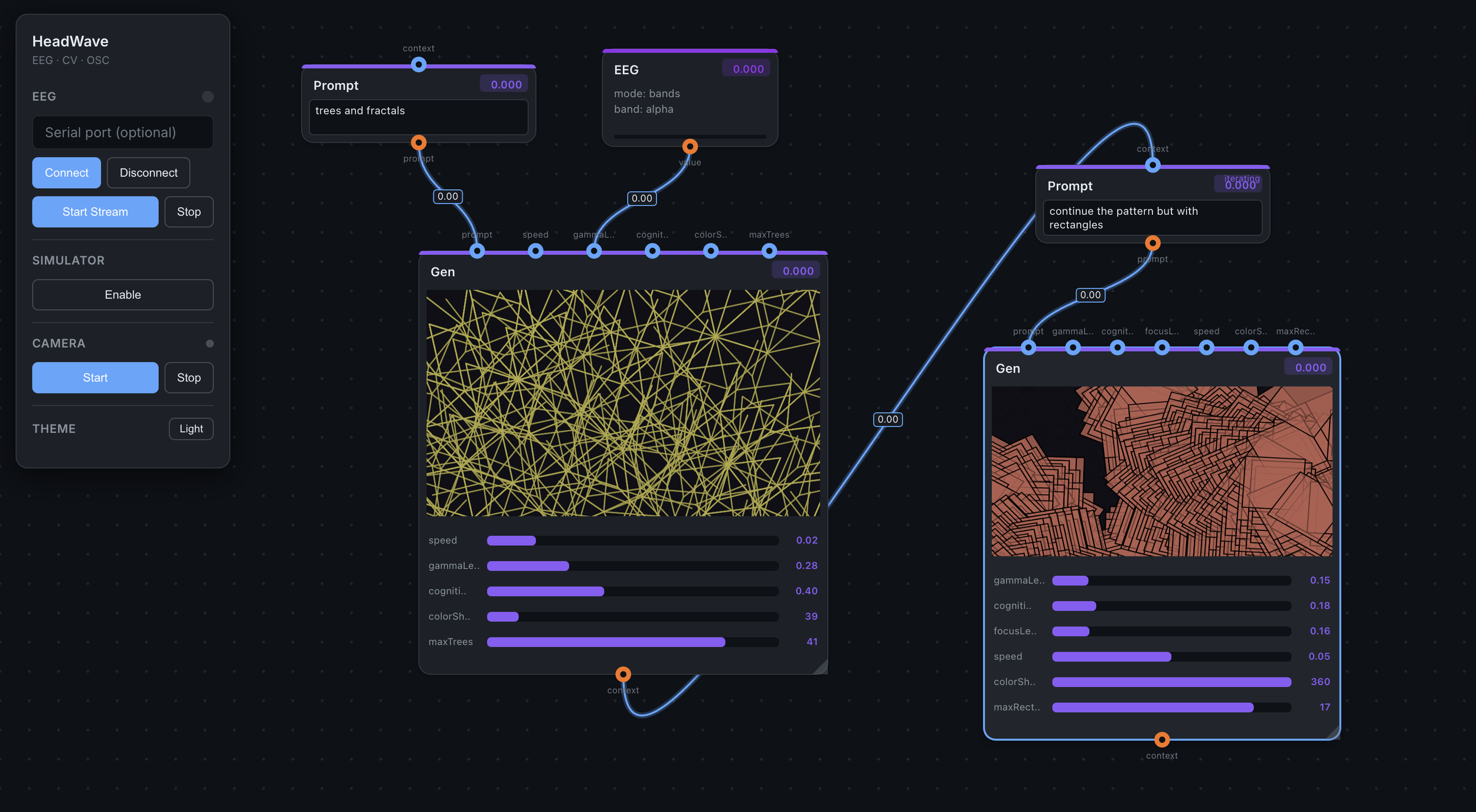

I build human-in-the-loop generative interfaces that preserve creative intention while reducing technical burden. My work focuses on structured, interpretable layers, such as domain-specific languages, spatial abstractions, and gesture-based controls, that let creators shape timing, variation, and behavior without surrendering authorship to automation. These systems keep humans at the center of the generative process, enabling faster iteration, deeper exploration, and closer alignment between intention and outcome.

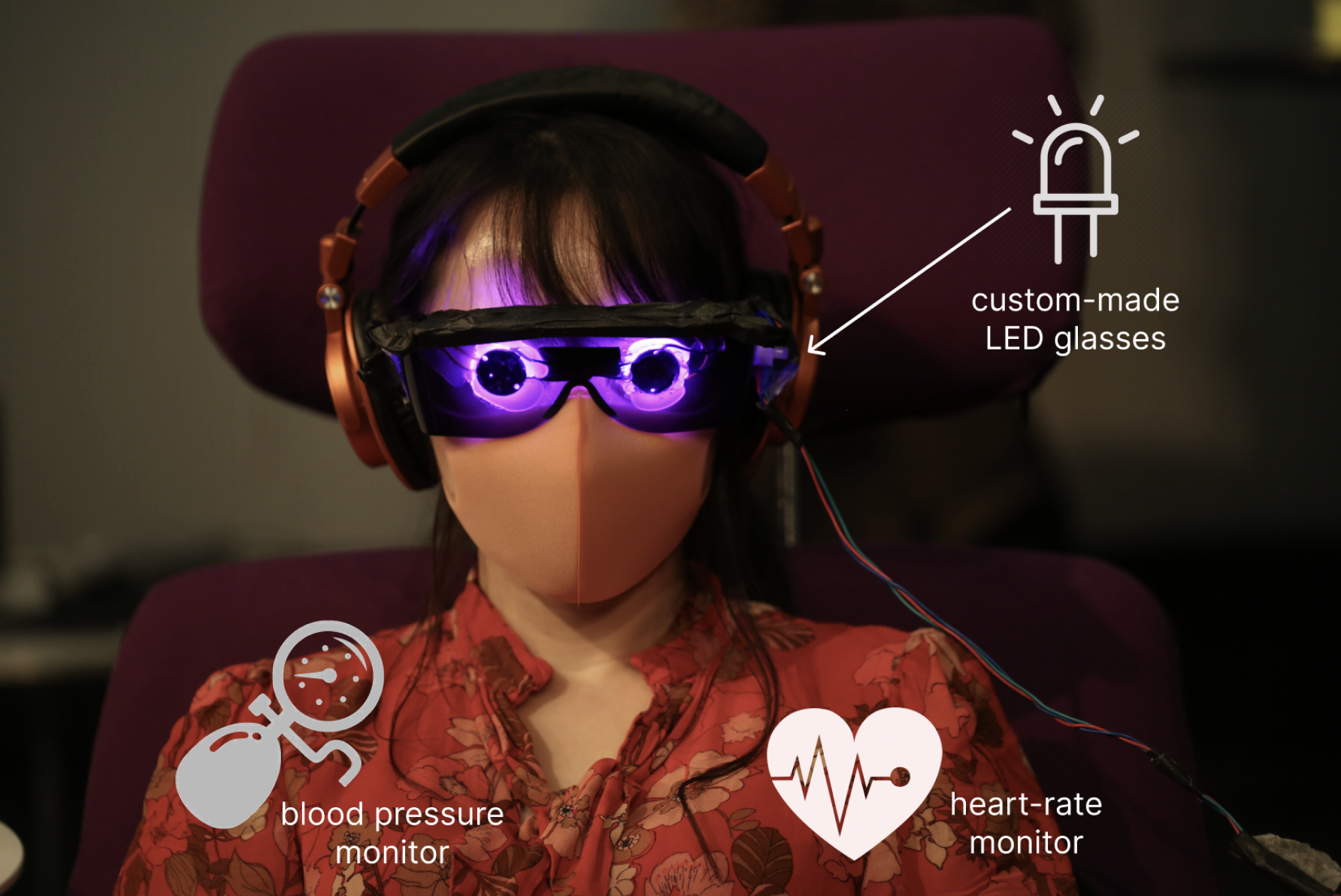

Bio-Graphic Notations:

Biofeedback systems for self-regulation through audiovisual mapping

I explore how internal physiological signals can become expressive material for art and self-reflection. Bio-Graphic Notations maps breath, tension, and affect into visual and sonic structures, giving people new ways to perceive their internal state. This work now extends to BCI devices and VR simulations that visualize perceptual patterns, including non-psychotic schizophrenia phenotypes, to support a clearer understanding of one's own experience.

Auditory Neuroscience, Psychoacoustics, and Learning:

EEG studies, auditory statistical learning, multimodal retrieval, and music cognition

I study how humans learn structure in sound and form expectations through exposure. This includes statistical learning work with the Bohlen–Pierce scale, comparisons of how jazz and classical musicians internalize pattern and variation, and embodied learning tools like Prisms that use grammars to teach through interaction. I also conduct EEG studies that examine auditory imagination, mismatch responses, and the neural signatures of perceptual learning.